If you are managing a website and want to improve its SEO performance, understanding the role of robots.txt is essential. As part of the Best Digital Marketing Academy in Bhiwani, Hisar, Chandigarh, Charkhi Dadri, Mahendragarh, Rohtak, and Loharu, we teach our students how to use robots.txt effectively for better search engine optimization.

In this blog, we’ll explain when and why to use robots.txt and how it can improve your website’s visibility while saving your crawl budget.

1. To Block Sensitive Pages from Crawling

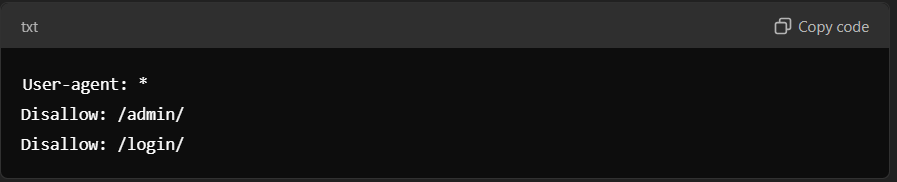

Robots.txt is a great tool for stopping search engines from crawling sensitive or private pages, like admin panels or login pages. For example:

User-agent: *

Disallow: /admin/

Disallow: /login/

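This way, you keep crawlers away from unnecessary pages and keep their focus on your key pages. Note that a blocked URL can still appear in search results if other pages link to it, so pages that must stay hidden need authentication.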

2. Avoid Duplicate Content Issues

Duplicate content makes it harder for search engines to decide which URL to rank, and it wastes crawl time. If your site has similar pages with different URLs, robots.txt can keep crawlers away from the extra versions. This is a major point we cover in our SEO module at the Best Digital Marketing Academy in Hisar and Rohtak.
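A minimal sketch of this idea, assuming a hypothetical site where the same listing is reachable through `sort` and `sessionid` query parameters (both parameter names are illustrative). The `*` wildcard is honored by major crawlers such as Googlebot and Bingbot, but not necessarily by every bot:

```text
User-agent: *
# Block any URL whose query string starts with the assumed sort parameter
Disallow: /*?sort=
# Block any URL that carries the assumed session ID parameter
Disallow: /*sessionid=
```

When the duplicate versions should pass their ranking signals to one preferred URL, a canonical tag is usually a better fit, because a crawler cannot read anything on a page it is blocked from.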

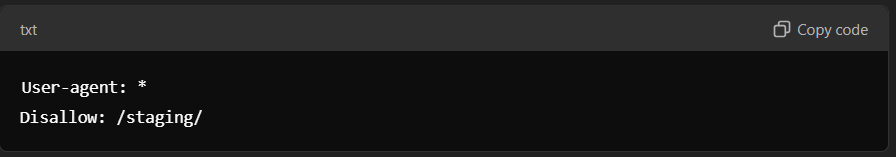

3. For Testing or Staging Websites

When working on a staging or testing version of your website, you can block crawlers from it until it’s ready for the live audience.
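A minimal sketch, assuming the staging copy lives on its own hypothetical host (for example staging.example.com) and serves its own robots.txt:

```text
# robots.txt served only on the staging host (hypothetical: staging.example.com)
User-agent: *
# A single slash blocks every URL on this host
Disallow: /
```

Take care that this file is not copied to the live site at launch, because `Disallow: /` blocks the whole site. Password protection is a stronger safeguard for staging, since robots.txt only asks well-behaved crawlers to stay away.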

4. Block Specific File Types

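Sometimes you might not want certain file types, such as PDFs, images, or scripts, to be crawled. Robots.txt lets you block them by URL pattern. Avoid blocking the CSS and JavaScript files your pages depend on, because search engines use them to render the page.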
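A minimal sketch, assuming you want to keep PDFs and one hypothetical downloads folder out of the crawl. The `*` and `$` pattern characters are honored by major crawlers such as Googlebot, but smaller bots may ignore them:

```text
User-agent: *
# Block every URL that ends in .pdf ($ marks the end of the URL)
Disallow: /*.pdf$
# Block everything under a hypothetical downloads folder
Disallow: /downloads/
```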

This ensures search engines focus only on the important parts of your website.

5. Save Your Crawl Budget

Google and other search engines allocate a limited crawl budget to each website. By blocking irrelevant or low-priority pages, you can ensure crawlers spend their time on high-priority content, such as service pages or blogs.
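A minimal sketch, assuming a hypothetical site with internal search results under /search/ and cart pages under /cart/ (both paths are illustrative). The optional Sitemap line points crawlers at the pages you do want visited, and its URL is a placeholder:

```text
User-agent: *
# Low-priority sections that should not use up crawl time (assumed paths)
Disallow: /search/
Disallow: /cart/

# Placeholder sitemap URL listing the high-priority pages
Sitemap: https://www.example.com/sitemap.xml
```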

6. Temporarily Block Pages

If you’re updating or creating new pages that aren’t ready, you can temporarily block them using robots.txt and allow access once the work is complete.
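A minimal sketch, assuming the unfinished pages sit under a hypothetical /new-services/ folder:

```text
User-agent: *
# Temporary rule: delete this line when the pages are ready to go live
Disallow: /new-services/
```

Crawlers keep a cached copy of robots.txt, so it may take a little while before they notice that the rule has been removed.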

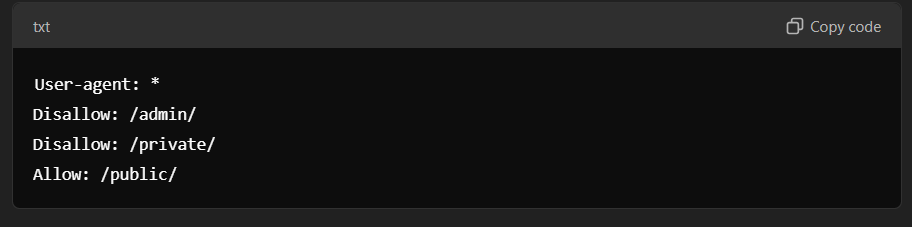

Example of a Simple Robots.txt File

Here’s a basic example of a well-structured robots.txt file:
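A minimal sketch of such a file, assuming a hypothetical site at www.example.com. The paths and the sitemap URL are placeholders to adapt to your own site:

```text
# robots.txt, placed at the site root (https://www.example.com/robots.txt)

# Rules for all crawlers
User-agent: *
Disallow: /admin/
Disallow: /login/
Disallow: /*.pdf$
# A more specific Allow overrides the broader Disallow (hypothetical file)
Allow: /admin/help.html

# Location of the XML sitemap
Sitemap: https://www.example.com/sitemap.xml
```

The file must be named robots.txt and sit at the root of the host it applies to. Each subdomain needs its own copy.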

Things to Remember About Robots.txt

- Robots.txt isn’t for security; use authentication for sensitive data.

- Not all bots follow robots.txt rules, so it’s not foolproof.

- Blocking essential pages by mistake can hurt your SEO, so be careful.

Conclusion

Understanding how to use robots.txt can significantly impact your website’s SEO performance. Whether you are running a business website or learning about SEO at the Best Digital Marketing Academy in Bhiwani, Hisar, Chandigarh, Charkhi Dadri, Mahendragarh, Rohtak, or Loharu, mastering robots.txt is crucial.

If you want to learn more about optimizing websites, growing your online presence, and becoming an SEO expert, join our classes today! Let’s take your digital marketing skills to the next level. 🚀

If you want to know more, read the official Google article about robots.txt and the coverage on Searchengineland.com. You can also visit our related posts.